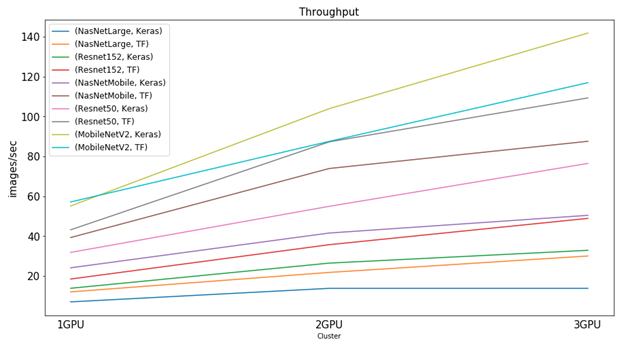

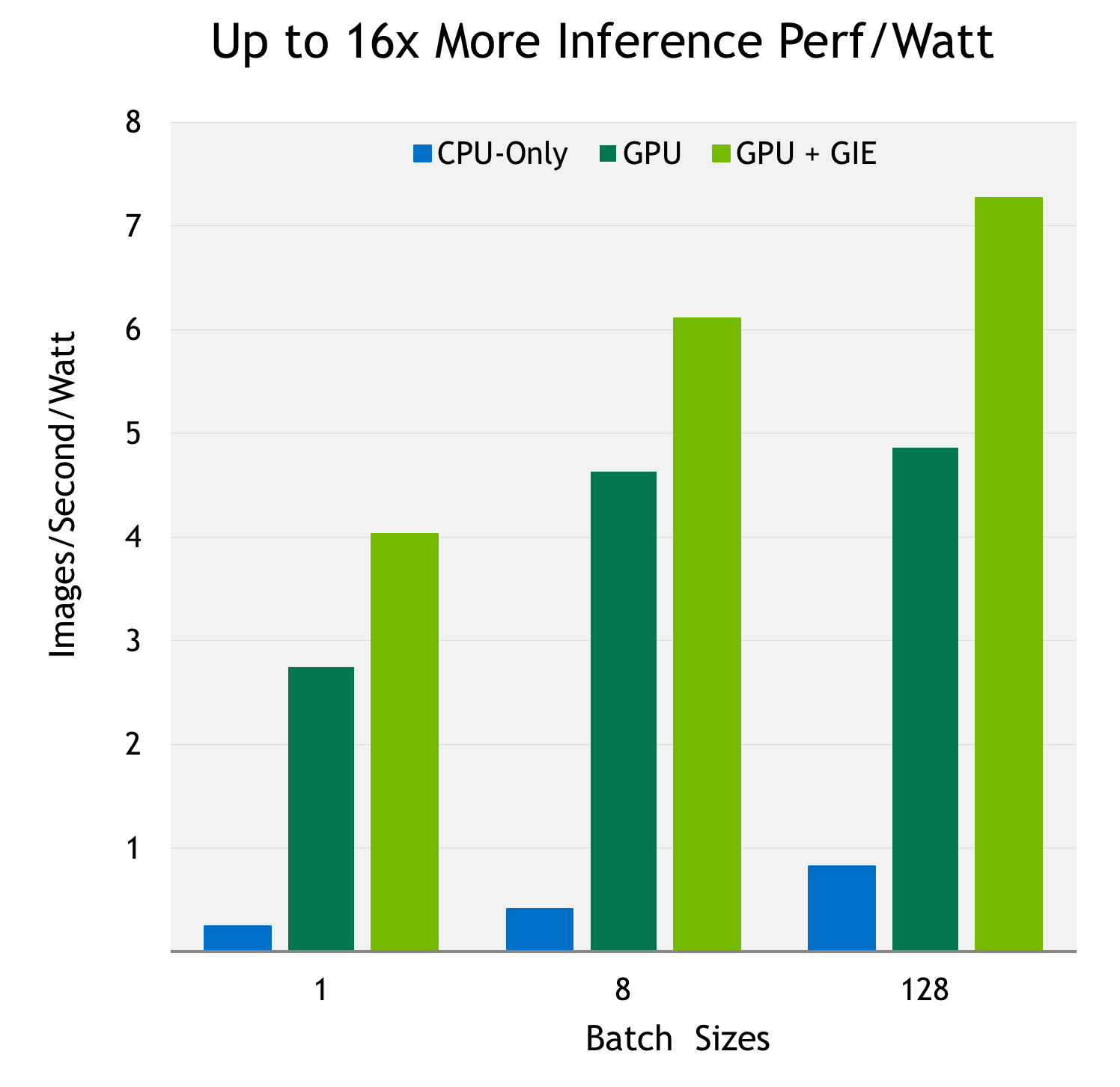

Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog

tensorflow - Why my deep learning model is not making use of GPU but working in CPU? - Stack Overflow

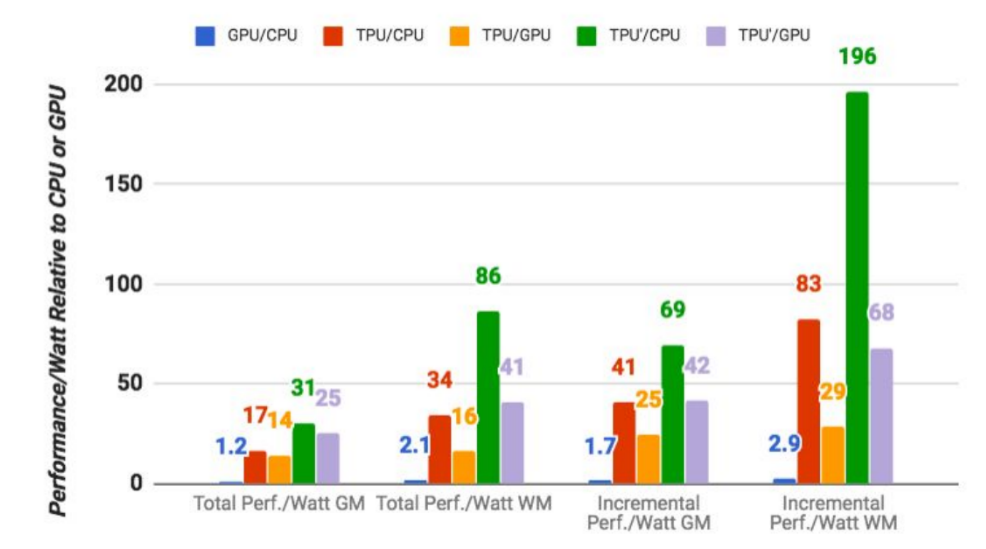

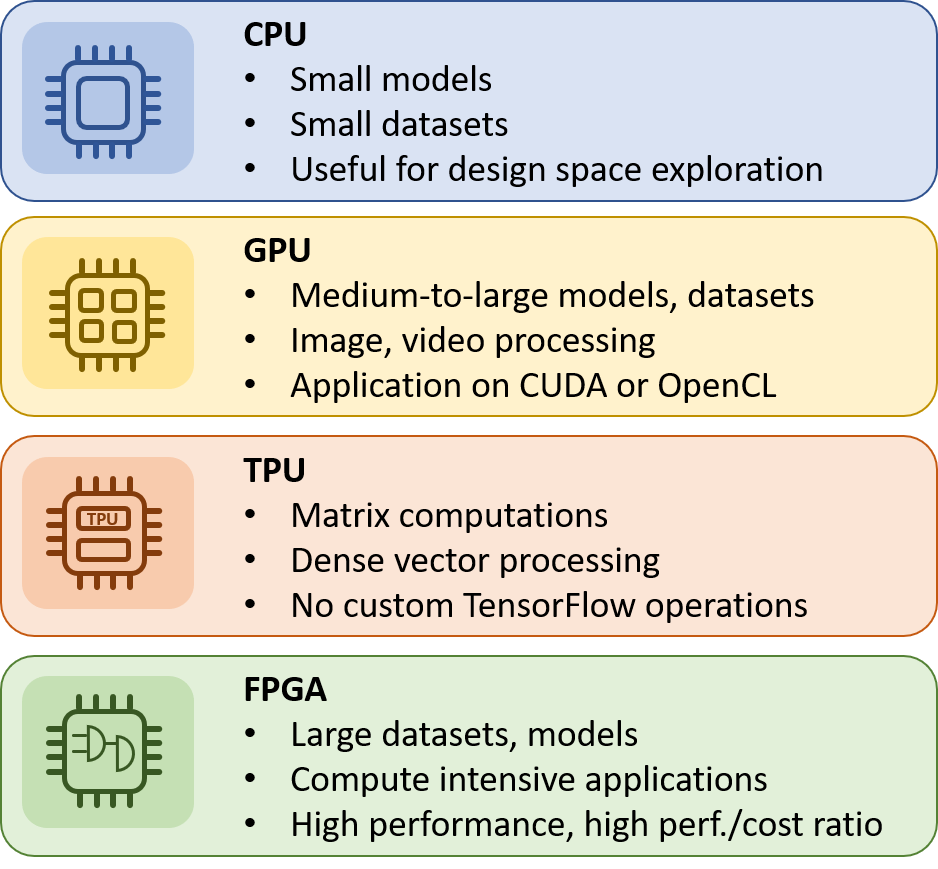

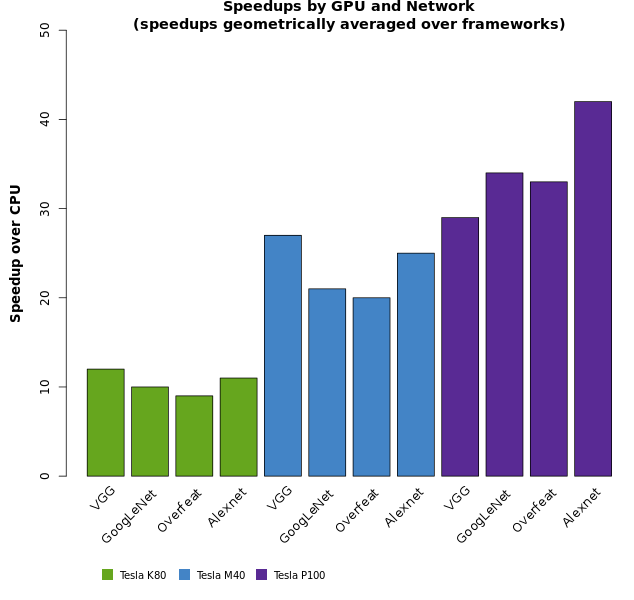

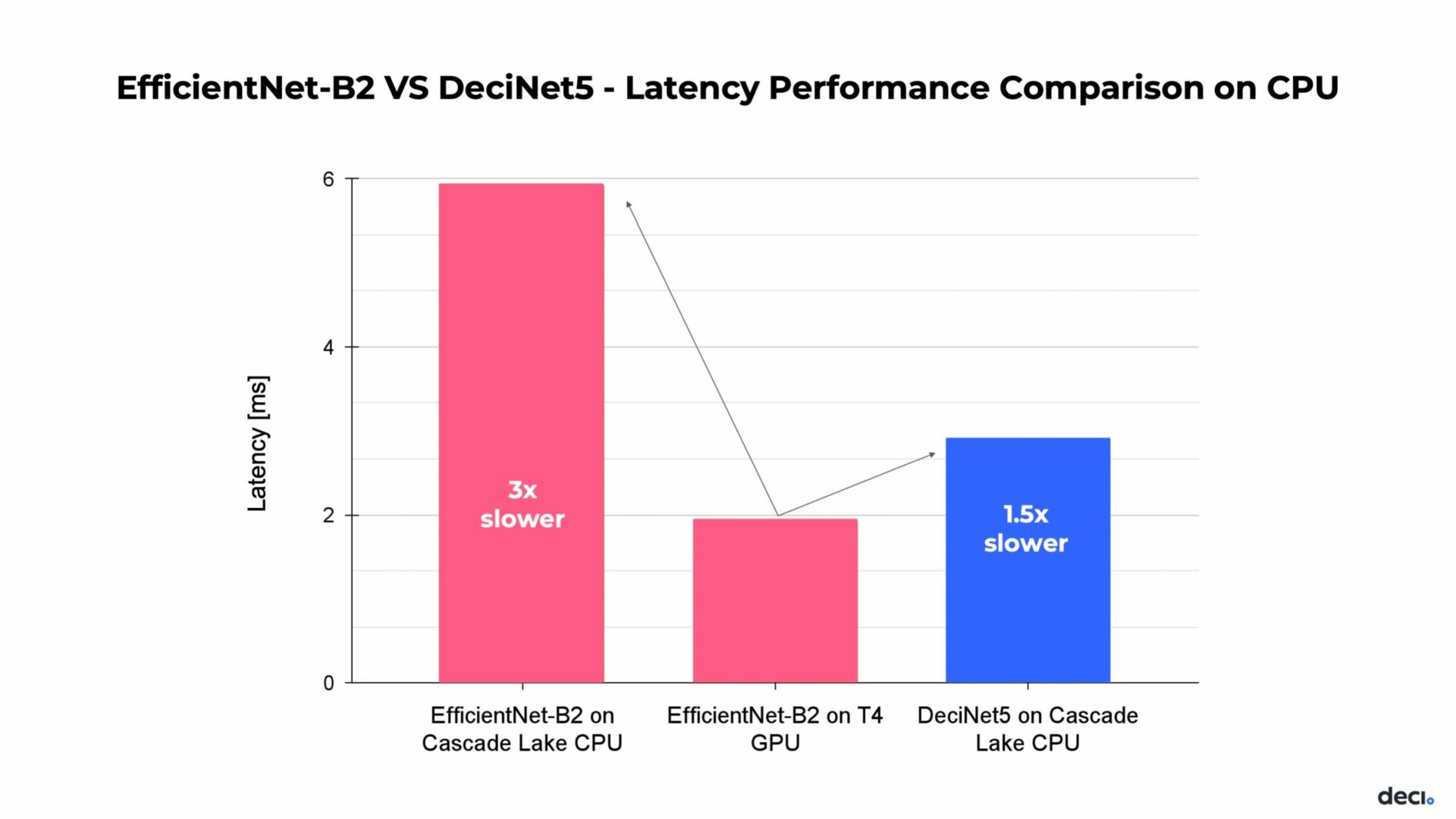

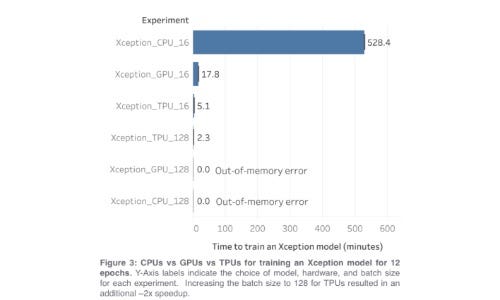

CPU vs GPU vs TPU and Feedback Loops in real world situations | by Inara Koppert-Anisimova | unpackAI | Medium